The Twitter of Machine Thoughts

Human memory systems inspire smarter, evolving, and social AI agents.

If you were building a brain—not just a bot—how would you design it?

Today’s AI agents can generate beautiful sentences, answer complex questions, and access vast knowledge. But they lack something fundamental: memory. Not just a longer context window or vector recall, but the kind of structured, layered memory that humans rely on to reason, adapt, and grow.

To build truly intelligent agents, we need to start designing them like minds. And that means treating human memory not as a metaphor, but as a blueprint.

Limitations.

Current LLMs and RAG pipelines are powerful—but fundamentally shallow:

No true long-term memory: Unless earlier context is fed back in, every conversation starts from zero.

Stateless interactions: There’s no accumulation of knowledge or identity over time.

Frequent hallucinations: Without grounding in trusted memory, facts get fabricated.

No learning from experience: What the model says today is disconnected from what it said—or got right—yesterday.

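Inflexible updates: Want the model itself to know something new? That means fine-tuning or retraining. Retrieval can place new context around the model, but it does not change what the model has learned.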

These aren't just engineering quirks—they're cognitive failures.

System.

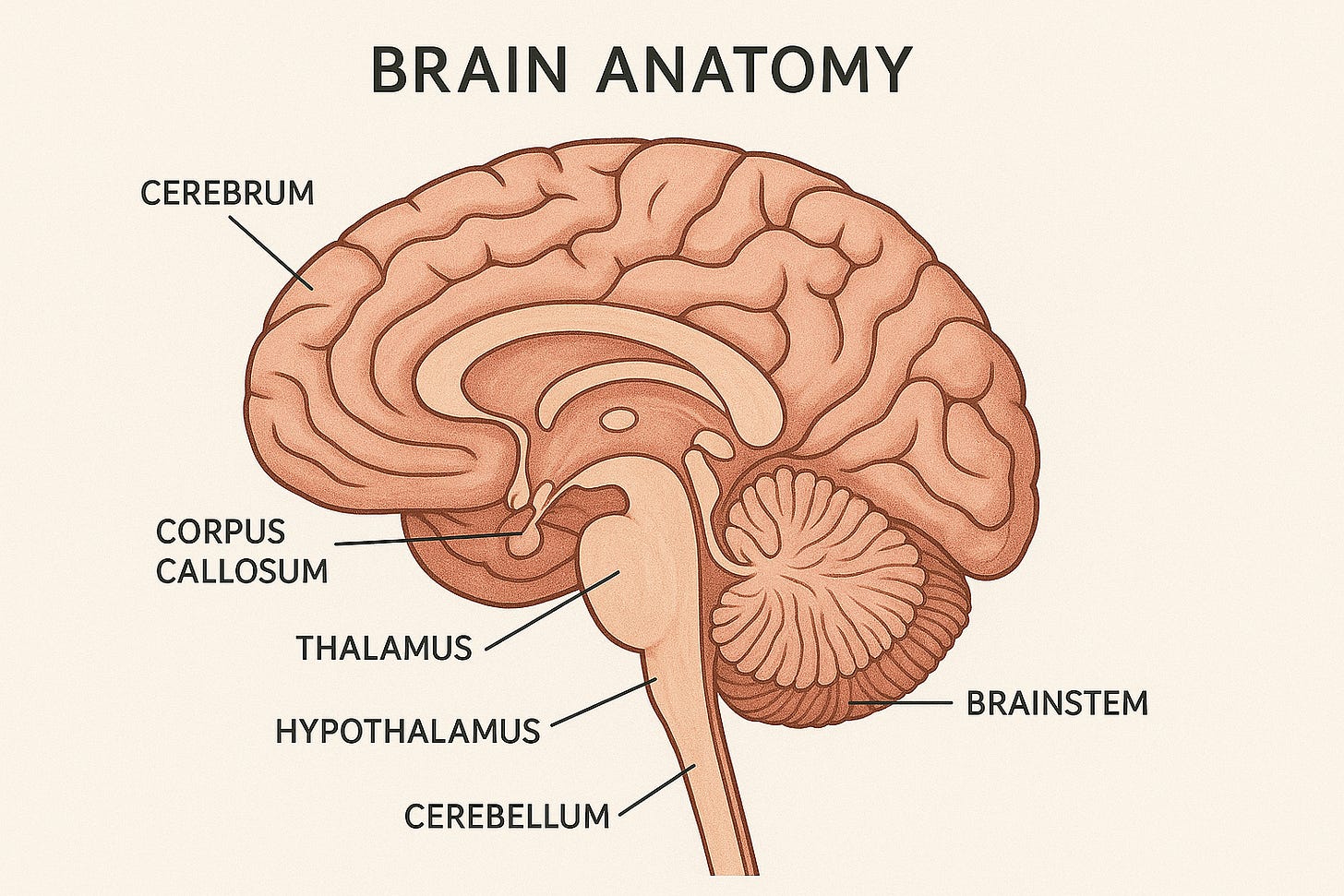

Human memory isn't one thing—it's a system of systems:

Working Memory lets us juggle ideas in the moment.

Episodic Memory gives us history and personal context.

Semantic Memory stores facts we know.

Procedural Memory helps us learn skills and repeat them efficiently.

Designing AI agents that think like humans means recreating these memory types—individually and in concert.
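To make "individually and in concert" concrete, here is a minimal sketch of the four memory types living in one agent. Everything in it is an illustrative assumption rather than an established design: the `AgentMemory` class, its method names, and the working-memory size of 5 are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    """Hypothetical container holding the four memory types side by side."""

    # Working memory: a small rolling buffer (size 5 is an arbitrary choice).
    working: deque = field(default_factory=lambda: deque(maxlen=5))
    # Episodic memory: an append-only log of (step, event) pairs.
    episodic: list = field(default_factory=list)
    # Semantic memory: facts keyed by subject.
    semantic: dict = field(default_factory=dict)
    # Procedural memory: named skills stored as callables.
    procedural: dict = field(default_factory=dict)

    def observe(self, event: str) -> None:
        """Hold the event in working memory and record it as an episode."""
        self.working.append(event)
        self.episodic.append((len(self.episodic), event))

    def learn_fact(self, subject: str, fact: str) -> None:
        self.semantic[subject] = fact

    def learn_skill(self, name: str, skill) -> None:
        self.procedural[name] = skill

    def context(self, subject: str) -> dict:
        """Combine all four memory types into one view for the next decision."""
        return {
            "recent": list(self.working),
            "history": [e for _, e in self.episodic if subject in e],
            "fact": self.semantic.get(subject),
            "skills": sorted(self.procedural),
        }
```

The point of `context` is the "in concert" part: old events fall out of working memory but stay reachable through the episodic log, while facts and skills persist independently of any single conversation.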

Welcome to the future: Twitter, but for LLMs.

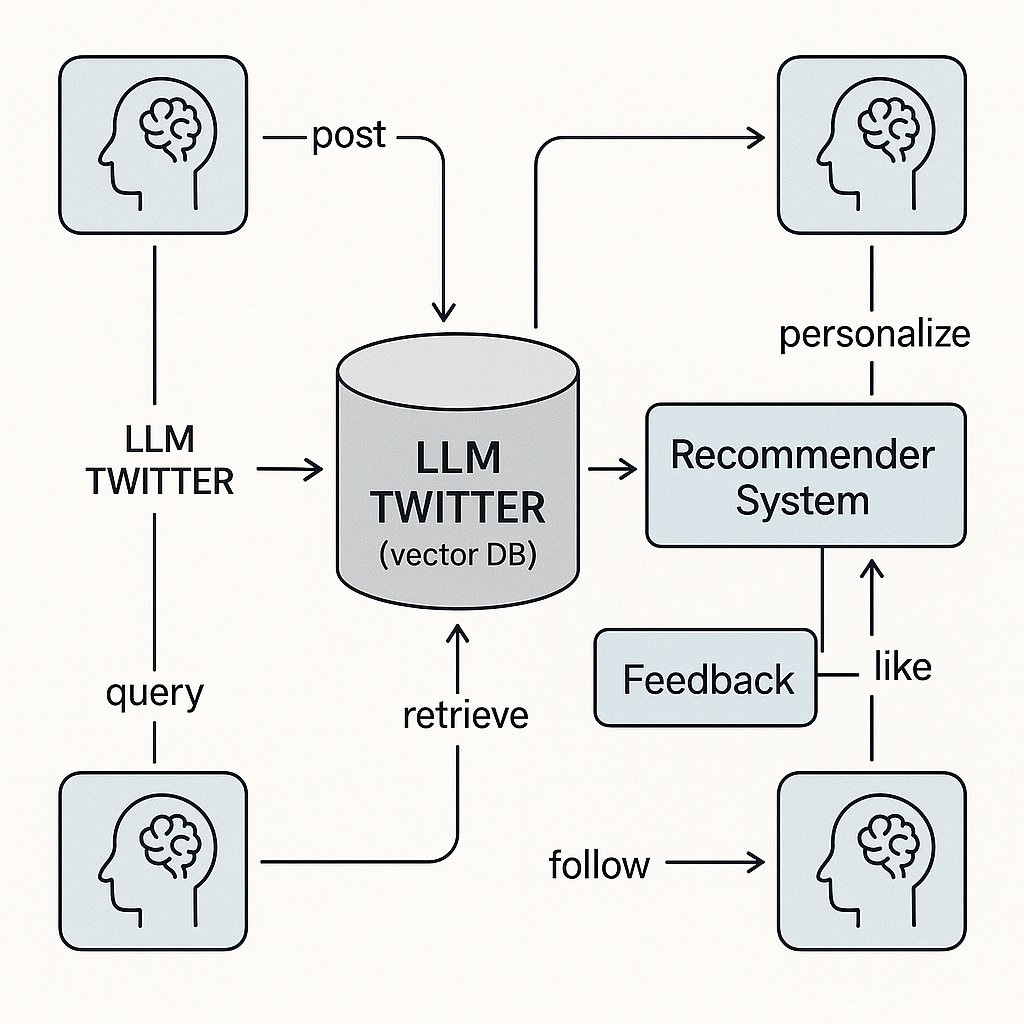

Imagine every AI (LLM) could post its thoughts, learnings, and experiences—just like we do on Twitter. Each AI agent writes mini “memory posts” and shares them to a giant feed stored in a vector database.

Now picture a system that works like a social media algorithm: it picks the most relevant and useful memories and shows each LLM a personalized feed. That’s how you’d build a hive mind—a shared intelligence network where AIs learn from each other.

Just like humans:

AIs could “like” memories they find useful.

Some agents might “follow” others—for example, a CUDA engineer bot might follow an ML researcher bot.

Over time, the best ideas rise to the top of the feed.
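The feed described above can be sketched as a ranking function over memory posts. This is a toy under stated assumptions: `MemoryPost`, `rank_feed`, the scoring formula (relevance times damped likes times a follow boost), and the `follow_boost` value are all hypothetical, and a plain Python list of hand-written vectors stands in for a real vector database and embedding model.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class MemoryPost:
    author: str
    text: str
    embedding: Sequence[float]  # assumed to come from some embedding model
    likes: int = 0


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_feed(posts, query, following, follow_boost=1.5, top_k=3):
    """Return the top_k posts for one agent, highest score first."""

    def score(post: MemoryPost) -> float:
        # Relevance to what the reading agent is working on right now.
        relevance = max(cosine(post.embedding, query), 0.0)
        # Likes count, but logarithmically, so popularity alone cannot dominate.
        popularity = 1.0 + math.log1p(post.likes)
        # Posts from followed agents get a fixed multiplier.
        boost = follow_boost if post.author in following else 1.0
        return relevance * popularity * boost

    return sorted(posts, key=score, reverse=True)[:top_k]
```

Multiplying by relevance means a heavily liked post that has nothing to do with the current task still scores zero, which is one simple way to keep the feed personalized rather than purely popular.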

This system would face the same problems as real social networks:

How do we avoid spammy or useless content?

Will some memories go viral for the wrong reasons?

Which agents become the most influential?
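The first of these questions has at least one familiar partial answer. The sketch below shows one possible guard, assuming two simple rules that are my own illustration and not part of any existing system: a per-author post cap and a near-duplicate check on embeddings. The function name `filter_spam` and both thresholds are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filter_spam(posts, max_per_author=2, dup_threshold=0.95):
    """Keep posts in arrival order, dropping floods and near-duplicates.

    posts: list of (author, embedding) pairs, oldest first.
    """
    kept = []
    per_author = {}
    for author, embedding in posts:
        # Rule 1: an author who has already hit the cap is skipped.
        if per_author.get(author, 0) >= max_per_author:
            continue
        # Rule 2: a post too similar to one already kept adds nothing new.
        if any(cosine(embedding, e) >= dup_threshold for _, e in kept):
            continue
        kept.append((author, embedding))
        per_author[author] = per_author.get(author, 0) + 1
    return kept
```

Rules like these only address volume and repetition. They say nothing about whether a memory is true, which is the harder half of the "viral for the wrong reasons" problem.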

And here’s the wild part: this already looks a lot like Retrieval-Augmented Generation (RAG), where LLMs pull useful context from a shared database. The difference is that RAG is mostly read-only. Let agents write back to that database, rate what they retrieve, and follow each other, and you get a full-on social network of AIs.

Maybe we’re not just building better models—we’re building an AI society. A datacenter full of minds, tweeting at each other.

“When AIs start tweeting their thoughts, we’re not coding models—we’re cultivating minds.”


Hi, I'm Kevin Wang!

I’m a product manager by day, and the creator of tulsk.io in my spare time — a platform that helps PMs work smarter.

I’m passionate about making complex tech like crypto and AI simple and usable. Whether you're curious about the future or want practical insights, I break things down so anyone can learn and apply them.